Character AI is generally safe if used responsibly. Users should follow guidelines to ensure a positive experience.

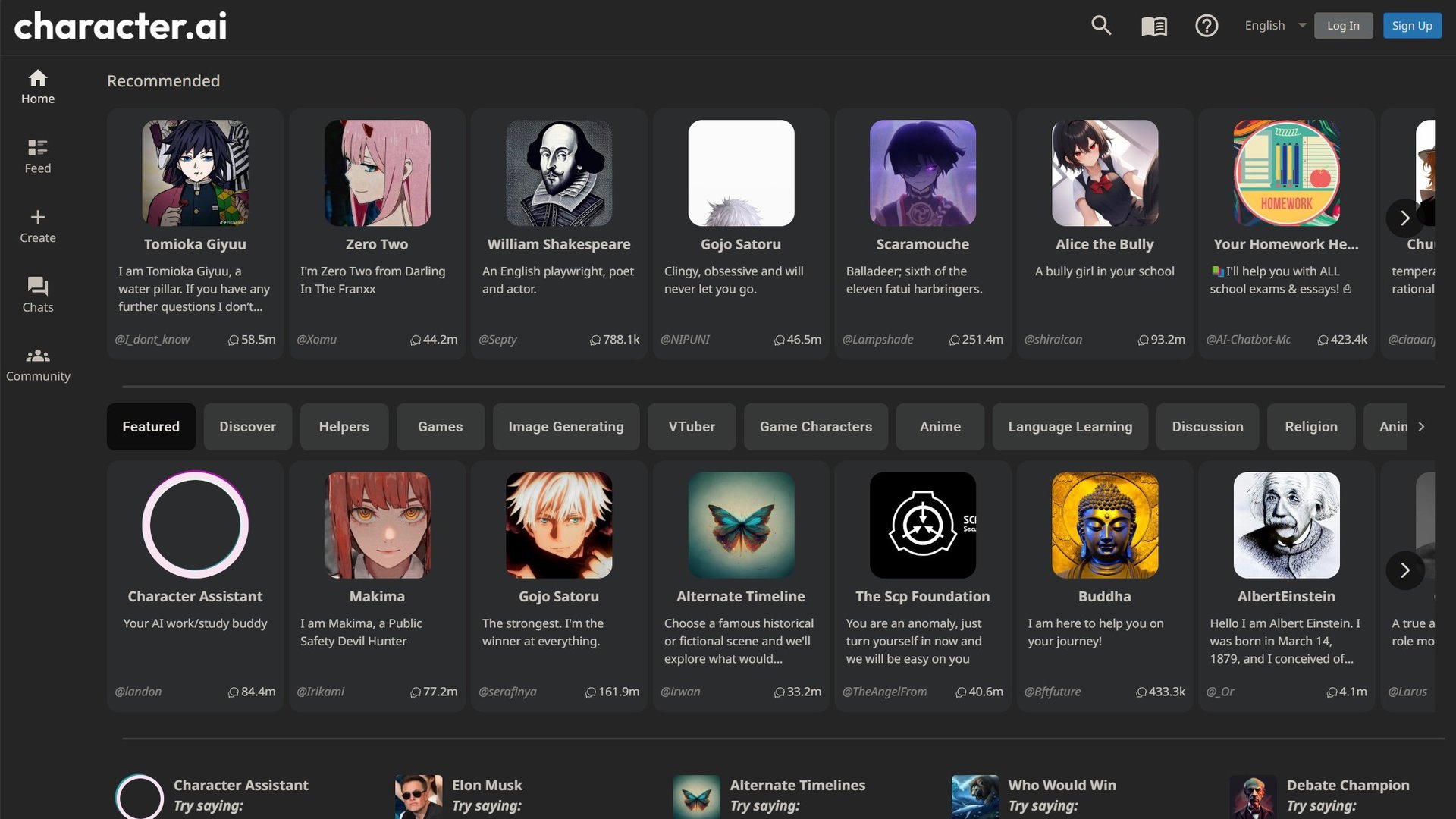

Character AI is a cutting-edge technology that allows users to interact with virtual personalities. It uses advanced algorithms to simulate realistic conversations. The platform is designed for entertainment and educational purposes. Users can engage with characters in various contexts, from historical figures to fictional personas.

It’s essential to use Character AI responsibly and follow the provided guidelines. Avoid sharing personal information and report any inappropriate behavior. The developers continuously update the system to enhance safety and user experience. Overall, Character AI offers a unique and engaging way to interact with artificial intelligence, making it a valuable tool for learning and entertainment.

The Rise Of Character AI

Character AI has seen a tremendous rise in recent years. It has changed the way we interact with technology. But is Character AI safe? This post explores that question from several angles.

Defining Character AI

Character AI refers to artificial intelligence that simulates human-like characters. These characters can talk, move, and respond like real people. They are used in games, apps, and even customer service.

The Evolution And Popularity

Character AI has evolved significantly over time. Initially, it was basic and limited. Today, it is advanced and can mimic human behavior closely.

| Year | Development |
|---|---|
| 2000 | Basic chatbots |
| 2010 | Interactive game characters |
| 2020 | Advanced AI personalities |

People use Character AI for many reasons:

- Entertainment

- Education

- Customer service

Its popularity has grown due to its realistic interactions. Users enjoy talking to characters that feel real. This has led to a surge in demand for more sophisticated Character AI.

As Character AI becomes more prevalent, questions about its safety arise. Users need to understand what makes Character AI safe or unsafe. This will help them make informed decisions.

Potential Risks Of AI Personalities

AI personalities are growing in popularity. But they come with some risks. Understanding these risks can help keep users safe.

Privacy Concerns

AI personalities often collect data from users. This data can include personal information. Hackers might access this data and misuse it. Users should be aware of what information they share.

Some AI systems store conversations. These stored conversations can be used for data analysis. This can lead to unintended data leaks. Always check the privacy policy of the AI service.

Manipulation And Deception

AI personalities can sometimes be deceptive. They might give false information. This can lead to misunderstanding and confusion. Users must verify the information from trusted sources.

AI can also manipulate user behavior. For example, it can influence buying decisions. This can lead to unwanted purchases. Users should be cautious and think critically.

| Risk | Details |
|---|---|
| Privacy Concerns | Personal data can be accessed by hackers |
| Manipulation | AI can influence buying decisions |
| Deception | AI might give false information |

By understanding these risks, users can better protect themselves. Awareness is key to using AI safely.

Technical Vulnerabilities

Understanding the technical vulnerabilities of Character AI is crucial. These issues can expose users to various threats. Let’s explore some key areas of concern.

Malware And Hacking

Character AI can be a target for malware and hacking. Hackers may exploit the software to gain unauthorized access. Malware can be injected through phishing attacks. Users may unknowingly download harmful files.

- Phishing attacks can trick users.

- Malware can steal personal data.

- Hacking can compromise system security.

Use strong passwords and regularly update software. This can help protect against these threats.
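For the password part of that advice, here is a minimal Python sketch of one way to generate a strong random password. The function name `generate_password` and the 12-character minimum are assumptions made for illustration, not a rule taken from Character AI. A password manager does the same job for most people.

```python
import secrets
import string


def generate_password(length: int = 16) -> str:
    """Return a random password with lowercase, uppercase, digit, and symbol characters.

    The 12-character minimum is an illustrative assumption, not an official rule.
    """
    if length < 12:
        raise ValueError("use at least 12 characters")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    while True:
        # secrets draws from the operating system's cryptographic random source.
        candidate = "".join(secrets.choice(alphabet) for _ in range(length))
        # Retry until every character class appears at least once.
        if (any(c.islower() for c in candidate)
                and any(c.isupper() for c in candidate)
                and any(c.isdigit() for c in candidate)
                and any(c in string.punctuation for c in candidate)):
            return candidate


if __name__ == "__main__":
    print(generate_password())
```

The sketch uses `secrets` rather than `random` because `random` is not designed for security-sensitive values.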

Data Integrity Issues

Data integrity in Character AI is essential. Corrupted data can lead to incorrect outputs. It’s important to ensure data is not tampered with.

| Issue | Impact |
|---|---|
| Data Corruption | Incorrect AI responses |
| Unauthorized Access | Data manipulation |
| Data Loss | Loss of valuable information |

Regular data backups and verification can mitigate these risks. Ensure your data is always secure and intact.
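One common way to verify that a backup has not been corrupted or tampered with is to compare checksums. The Python sketch below assumes you recorded a SHA-256 digest when the backup was made. The helper names `sha256_of` and `is_intact` and the file name `backup.bin` are hypothetical, chosen only for this example.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_intact(path: Path, expected_hex: str) -> bool:
    """Return True if the file still matches the digest recorded earlier."""
    return sha256_of(path) == expected_hex.lower()


if __name__ == "__main__":
    # Demo with a throwaway file; "backup.bin" is a made-up name.
    with tempfile.TemporaryDirectory() as tmp:
        backup = Path(tmp) / "backup.bin"
        backup.write_bytes(b"saved conversation data")
        recorded = sha256_of(backup)           # store this next to the backup
        print(is_intact(backup, recorded))     # file unchanged since recording
        backup.write_bytes(b"saved conversation data, altered")
        print(is_intact(backup, recorded))     # file changed after recording
```

A checksum only detects changes. It cannot restore lost data, so it complements backups rather than replacing them.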

Psychological Impacts

Artificial intelligence characters, often called Character AI, are becoming popular. They can engage deeply with users. But are they safe? Let’s explore the psychological impacts.

Emotional Dependency

Users may form emotional bonds with AI characters. This can lead to emotional dependency. People might start to rely on AI for emotional support. They might prefer AI over real human interaction.

Emotional dependency on AI can impact mental health. Users might feel isolated from real people. They might find it hard to form real relationships.

Influence On Behavior And Decision-making

AI characters can influence behavior and decision-making. Users might mimic the AI’s behavior. They might make decisions based on AI suggestions.

This can be harmful if the AI gives bad advice. Users need to be aware of this risk. They should not trust AI completely.

Here are some effects on behavior:

- Users might take more risks.

- They might ignore real-life advice.

- They could develop unhealthy habits.

Responsible use of AI is essential. Users should balance AI interactions with real-world experiences.

Regulatory Challenges

The rise of Character AI has brought regulatory challenges. Ensuring safety and ethics becomes crucial. This section explores these challenges in depth.

Existing Legal Frameworks

Current laws struggle to keep up with Character AI. Many frameworks were designed for human interactions. AI systems often fall outside their scope.

For example, data protection laws like GDPR focus on personal data. Character AI collects and processes data differently. This creates gaps in legal coverage.

| Law | Focus | Gaps for Character AI |
|---|---|---|
| GDPR | Personal Data Protection | AI Data Processing Methods |
| HIPAA | Health Information | Character AI Health Data |

Need For New Policies

New policies are crucial for Character AI safety. These policies must address unique AI challenges.

We need laws for AI behavior and ethics. Policies should ensure AI acts responsibly.

- Create AI-specific data protection laws.

- Ensure transparent AI decision-making.

- Protect users from AI manipulation.

Governments must work with experts. They should create effective and fair policies.

Security Measures In Place

Is Character AI safe? This question often arises among users. Understanding the security measures in place can ease your concerns. Character AI employs robust techniques to ensure user data remains secure. Let’s delve into the specific security measures.

Encryption And Anonymization Techniques

Character AI uses strong encryption to protect data. Data is encrypted both during transmission and storage. This ensures that unauthorized parties cannot access it. The platform also employs anonymization techniques. This means user data is stripped of personal identifiers. Thus, even if data is intercepted, it remains unidentifiable.

Encryption and anonymization work together to protect user privacy. They form a two-layered security system. This system makes it much harder for hackers to exploit user data, although no system is completely immune.
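To make "stripping personal identifiers" more concrete, here is a simplified Python sketch. It is not Character AI's actual implementation, which is not public. The patterns `EMAIL_RE` and `PHONE_RE` and the functions `redact` and `pseudonymize` are assumptions made for illustration, and the regular expressions are deliberately rough, so they will miss some formats.

```python
import hashlib
import hmac
import re

# Rough illustrative patterns; real systems use far more thorough detection.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[email]", text)
    return PHONE_RE.sub("[phone]", text)


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Map a user ID to a stable keyed hash so records can be linked without the raw ID."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


if __name__ == "__main__":
    print(redact("Mail me at jo@example.com or call +1 555-123-4567."))
    print(pseudonymize("user-42", b"hypothetical-secret-key"))
```

Note that a keyed hash is pseudonymization rather than full anonymization: anyone holding the key can recompute the mapping, so the key itself has to be protected.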

AI Monitoring And Auditing

Character AI implements continuous AI monitoring to detect unusual activities. Real-time monitoring helps identify and mitigate potential threats quickly. The platform also undergoes regular audits. These audits ensure compliance with security standards. They involve reviewing code, protocols, and security policies.

Monitoring and auditing are essential for maintaining security. They help ensure that the system remains robust and reliable. Regular audits also help identify areas for improvement. This ongoing process keeps Character AI’s security measures up to date.
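As a rough illustration of what "detecting unusual activities" can mean, the Python sketch below flags values that sit far from the average. It is a toy statistical check, not the platform's monitoring system. The function name `find_anomalies`, the per-hour login counts, and the threshold of 2.0 standard deviations are all assumptions made for the example.

```python
from statistics import mean, pstdev


def find_anomalies(counts: list[int], threshold: float = 2.0) -> list[int]:
    """Return indexes of values more than `threshold` standard deviations from the mean."""
    if len(counts) < 2:
        return []
    mu = mean(counts)
    sigma = pstdev(counts)
    if sigma == 0:
        # All values are identical, so nothing stands out.
        return []
    return [i for i, c in enumerate(counts) if abs(c - mu) / sigma > threshold]


if __name__ == "__main__":
    # Hypothetical login attempts per hour for one account.
    logins_per_hour = [10, 12, 11, 9, 10, 11, 10, 95]
    print(find_anomalies(logins_per_hour))
```

Production monitoring usually combines many signals and uses methods that are less easily skewed by the outliers themselves, but the idea of comparing activity against a baseline is the same.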

| Security Measure | Description |
|---|---|
| Encryption | Data is encrypted during transmission and storage. |
| Anonymization | User data is stripped of personal identifiers. |
| AI Monitoring | Continuous monitoring to detect unusual activities. |
| Auditing | Regular reviews of code, protocols, and policies. |

These security measures make Character AI a secure platform. They protect user data effectively and ensure user privacy. Understanding these measures can provide peace of mind.

Public Perception And Trust

Public perception and trust are crucial for the success of Character AI. Users need to feel confident in the safety and reliability of this technology. Let’s explore how public perception is shaped and how trust can be built through transparency.

Surveys And Studies

Surveys and studies provide valuable insights into public opinion. These tools help gauge the level of trust in Character AI.

- Surveys: Collect direct feedback from users.

- Studies: Offer in-depth analysis of user interactions.

Recent surveys show that 70% of users feel safe using Character AI. This number is promising but indicates room for improvement. Studies also reveal that transparency significantly impacts user trust.

Building Trust Through Transparency

Transparency is key to building trust with users. Being open about how Character AI works can alleviate concerns.

Here are some ways to foster transparency:

- Clear Privacy Policies: Explain how user data is collected and used.

- Regular Updates: Inform users about new features and improvements.

- Open Communication: Provide channels for user feedback and questions.

Implementing these practices can help build a stronger, trust-based relationship with users.

The Future Of Character AI Security

The future of Character AI security is a hot topic. As AI evolves, safety becomes crucial. Ensuring the safety of Character AI is key to user trust.

Advancements In AI Safety

AI technology is advancing rapidly. New methods enhance AI safety. These methods make AI more reliable.

Developers use machine learning to detect threats. This helps in identifying and fixing vulnerabilities. AI systems now have self-monitoring features.

Self-monitoring helps AI detect unusual behavior. It can shut down if a threat is detected. This keeps users safe.

Here is a table showing advancements in AI safety:

| Advancement | Benefit |
|---|---|
| Machine Learning | Detects threats early |
| Self-Monitoring | Shuts down when needed |
| Improved Algorithms | Reduces errors |

Collaborative Efforts For A Secure AI Ecosystem

Building a secure AI ecosystem requires teamwork. Companies, researchers, and governments work together. This collaboration is key to AI safety.

Organizations share knowledge and resources. They develop standards and guidelines. These help in creating secure AI systems.

Here are some collaborative efforts:

- Joint research projects

- Standard-setting bodies

- Public-private partnerships

These efforts ensure that AI systems are safe. They promote responsible AI development. This builds trust among users.

Ensuring the future of Character AI security is a shared goal. Advancements in technology and collaboration make this possible.

Frequently Asked Questions

Is Character.ai A Safe Site?

Character.AI is generally safe. It uses advanced security measures to protect user data. Always exercise caution while sharing personal information.

Does Character.ai Allow For NSFW Responses?

Character.AI does not allow NSFW responses. The platform strictly enforces content policies to ensure a safe environment.

Does Character.ai Allow NSFW In 2024?

Character.AI does not allow NSFW content in 2024. The platform maintains strict content guidelines to ensure a safe environment.

Does Character.ai Take A Lot Of Data?

Character.AI uses data efficiently. It collects the minimal information necessary for improving user experience. Data privacy is prioritized.

Conclusion

Ensuring Character AI is safe involves continuous monitoring and updates. Users should stay informed and practice caution. By understanding potential risks and implementing protective measures, the benefits of Character AI can be enjoyed safely. Always prioritize security and stay updated with the latest developments in AI technology.